The necessity and difficulty of integrating video with radar

Story, March 03, 2015

Radar – essential to virtually all critical military environments and a mainstay in almost all vessels – has become more difficult to integrate with actual live video feeds from various camera interfaces. This situation arises primarily due to the complexity of properly routing and managing the various feeds. However, designers must overcome this complexity because the live video is so vital in complementing the radar data; not all information can be relayed with radar alone.

Radar and cameras

Radar has been a powerful tool in the military environment, but it still can’t tell the full story in a combat situation. It is now standard in aircraft, ground vehicles, submarines, and sea craft, but video has been more difficult to integrate. Video is a necessary addition, however, even if it is to simply supplement the radar data and better interpret targets of interest. With an integrated system, the radar data can be directly correlated with the live video feed to pinpoint the target of interest and then analyze and track that target.

Without this live camera data, full analysis and verification are difficult. Lack of live video unfortunately increases the possibility of false positives and delayed response times.

Difficulties of raw video

Modern digital radar data is smaller than live video feeds and is typically sent in a standard format (like ASTERIX CAT 240) over Ethernet as User Datagram Protocol (UDP) packets. This data is then retrieved onboard the vessel, typically via a rugged embedded system such as VME, VPX, or CompactPCI, because most military applications have strict environmental requirements. The data can then be displayed on this local embedded system or sent off vessel to networked computers at other interested locations, like control/ground stations.
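To make the radar side concrete, here is a minimal Python sketch of the first thing a receiver does with such a UDP datagram: read the three-byte ASTERIX data block header (one category byte, then a two-byte big-endian length that counts the header itself). The function names are illustrative only and do not come from any particular radar suite, and decoding of the CAT 240 payload itself is left out.

```python
import struct

ASTERIX_CAT_RADAR_VIDEO = 240  # CAT 240 carries radar video


def parse_asterix_header(datagram: bytes):
    """Return (category, block_length) from the 3-byte data block header."""
    if len(datagram) < 3:
        raise ValueError("datagram too short for an ASTERIX header")
    # One unsigned byte (category) followed by a big-endian 16-bit length.
    category, length = struct.unpack_from("!BH", datagram, 0)
    if length < 3 or length > len(datagram):
        raise ValueError("declared block length does not fit the datagram")
    return category, length


def is_radar_video(datagram: bytes) -> bool:
    """True when the datagram starts with a CAT 240 data block."""
    category, _ = parse_asterix_header(datagram)
    return category == ASTERIX_CAT_RADAR_VIDEO
```

A receiver would typically call `is_radar_video()` on each datagram read from the socket and hand matching blocks to the radar display software.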

Raw live camera video doesn’t have the luxury of this simple setup, however, because the resulting data is too large to send effectively over Ethernet. Gigabit Ethernet maxes out at 1 Gbps, while raw 1080p30 video runs at 1.49 Gbps. Even with reduced resolution, frame rate, and chroma sampling, raw video will still take up most if not all of the Ethernet bandwidth, essentially clogging the network. Therefore, the only way to get raw video to all interested parties is to hardwire it directly to each party.
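The arithmetic behind these figures can be sketched in a few lines of Python. The assumption here is that the 1.49 Gbps number corresponds to the HD-SDI link rate, which covers the full 2200 x 1125 raster (active picture plus blanking) at 20 bits per pixel for 10-bit 4:2:2 sampling. The reduced format in the last lines is a hypothetical example, not one taken from a specific system.

```python
GIGABIT_ETHERNET_BPS = 1_000_000_000


def raw_video_bps(width, height, fps, bits_per_pixel):
    """Uncompressed bit rate for a given raster, frame rate, and pixel depth."""
    return width * height * fps * bits_per_pixel


def link_utilization(bps, link_bps=GIGABIT_ETHERNET_BPS):
    """Fraction of the link that a single stream would occupy."""
    return bps / link_bps


# Full HD-SDI raster for 1080p30 (includes blanking), 10-bit 4:2:2.
hd_sdi_link = raw_video_bps(2200, 1125, 30, 20)

# Active picture only, same sampling.
active_1080p30 = raw_video_bps(1920, 1080, 30, 20)

# Hypothetical reduced format: 720p30, 8-bit 4:2:0 (12 bits per pixel).
reduced_720p30 = raw_video_bps(1280, 720, 30, 12)

for name, bps in [("HD-SDI 1080p30", hd_sdi_link),
                  ("active 1080p30", active_1080p30),
                  ("720p30 4:2:0", reduced_720p30)]:
    print(f"{name}: {bps / 1e9:.3f} Gbps, "
          f"{link_utilization(bps):.0%} of Gigabit Ethernet")
```

Even the reduced format uses roughly a third of a gigabit link for one camera, so a handful of raw feeds would saturate the network.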

Hardwiring creates its own complexities, however. Serial digital interface (SDI) is typically used in modern environments because it is digital and can be carried over a single coax cable, or over fiber for longer distances, but the video still has to be hardwired to each interested party. This setup involves not only running a physical cable to every party (which in turn limits the number of viewers), but also splitting the single camera feed across multiple cables.

Again, this assumes only a single camera feed. Typically, cameras are mounted at all critical angles of the vessel, with each of these feeds needing to be split and routed to every interested party. An additional switch is necessary to allow each party to select which feed is displayed at any given time. Needless to say, this approach becomes a mess of wires very quickly, all while limiting further expansion.
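A rough count shows how quickly the cabling grows. The Python sketch below assumes every viewer needs a dedicated run from every camera in the hardwired case, and one network drop per endpoint in the Ethernet case; the viewer count of four is a made-up figure for illustration.

```python
def hardwired_runs(cameras, viewers):
    """Point-to-point video runs: every camera split out to every viewer."""
    return cameras * viewers


def networked_runs(cameras, viewers):
    """Ethernet drops: one per camera encoder plus one per viewer."""
    return cameras + viewers


cameras, viewers = 6, 4  # viewer count is hypothetical
print("hardwired:", hardwired_runs(cameras, viewers))
print("networked:", networked_runs(cameras, viewers))
```

Adding a fifth viewer costs six more runs in the hardwired layout but only one more network drop.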

Back to Ethernet

As mentioned previously, sending raw video over Ethernet is unworkable because of its large data size. Fortunately, there is a way to send video over Ethernet: by compressing and streaming it. Thanks to the current compression standard, H.264, previous issues of latency and quality are no longer as relevant. H.264 is highly configurable and can produce output that is visually close to the source, and hardware-accelerated encoders ensure that the encoding is done in a timely manner.

Standards such as RTP and MPEG-2 TS enable the live video to be encapsulated with other data (like audio and metadata) and then sequenced correctly at the other end with minimal overhead.
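As an illustration of how little overhead is involved, the following Python sketch parses the 12-byte fixed RTP header and uses its sequence number to put a small batch of packets back in order. The helper names are illustrative, header extensions and CSRC lists are ignored, and the sort does not handle the 16-bit sequence counter wrapping around, which a real receiver would need to address.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    marker: bool
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Decode the 12-byte fixed RTP header at the start of a packet."""
    if len(packet) < 12:
        raise ValueError("packet shorter than the 12-byte RTP fixed header")
    b0, b1, seq, ts, ssrc = struct.unpack_from("!BBHII", packet, 0)
    return RtpHeader(
        version=b0 >> 6,           # top two bits
        marker=bool(b1 & 0x80),    # top bit of the second byte
        payload_type=b1 & 0x7F,    # e.g. 33 for an MPEG-2 transport stream
        sequence=seq,
        timestamp=ts,
        ssrc=ssrc,
    )


def in_sequence_order(packets):
    """Sort a small batch of packets by RTP sequence number (no wraparound)."""
    return sorted(packets, key=lambda p: parse_rtp_header(p).sequence)
```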

Sent as UDP multicast, the stream is also stateless, meaning that any number of computers on the network can access and display it without adding load on the sender. The system then becomes easy to scale for additional viewers and allows the stream to be sent to remote locations.
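From a viewer's point of view, joining such a stream takes only a few socket calls. The Python sketch below uses the standard `socket` module. Any group address and port passed in (for example `239.1.1.1` and `5004`) are placeholders chosen for illustration, and the choice of interface is left to the operating system.

```python
import socket
import struct


def make_membership_request(group: str) -> bytes:
    """Build the ip_mreq structure: group address, then INADDR_ANY."""
    return struct.pack("4s4s",
                       socket.inet_aton(group),
                       socket.inet_aton("0.0.0.0"))


def open_multicast_receiver(group: str, port: int) -> socket.socket:
    """Return a UDP socket bound to the port and joined to the group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM,
                         socket.IPPROTO_UDP)
    # Allow several viewers on the same host to bind the same port.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    make_membership_request(group))
    return sock
```

A viewer would call `open_multicast_receiver("239.1.1.1", 5004)` and then loop on `recvfrom()`. The encoder never learns how many receivers have joined, which is what keeps the sender's load constant.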

How to compress

Since streaming compressed video is the easiest way to distribute live camera feeds throughout a large environment, the question becomes: How do we properly compress the video in a naval environment? Hardware acceleration, necessary on both the encoder and decoder sides of the pipeline, ensures consistently stable performance and is more efficient than software codecs, which consume a lot of CPU time. Efficiency is key because encoding inherently adds latency, but with a proper hardware encoder this should not be an issue: most encoders stay around 50-150 ms of latency.
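To put that latency range in perspective, it helps to express it in frames. The short Python sketch below assumes a 30 fps feed, which is an illustrative choice rather than a requirement of any encoder.

```python
def latency_in_frames(latency_ms, fps):
    """Convert an encoder latency in milliseconds to a number of frames."""
    return latency_ms * fps / 1000.0


for latency_ms in (50, 150):
    frames = latency_in_frames(latency_ms, 30)
    print(f"{latency_ms} ms at 30 fps is about {frames:.1f} frames")
```

At 30 fps each frame lasts about 33 ms, so the quoted range amounts to between one and a half and four and a half frames of delay from the encoder.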

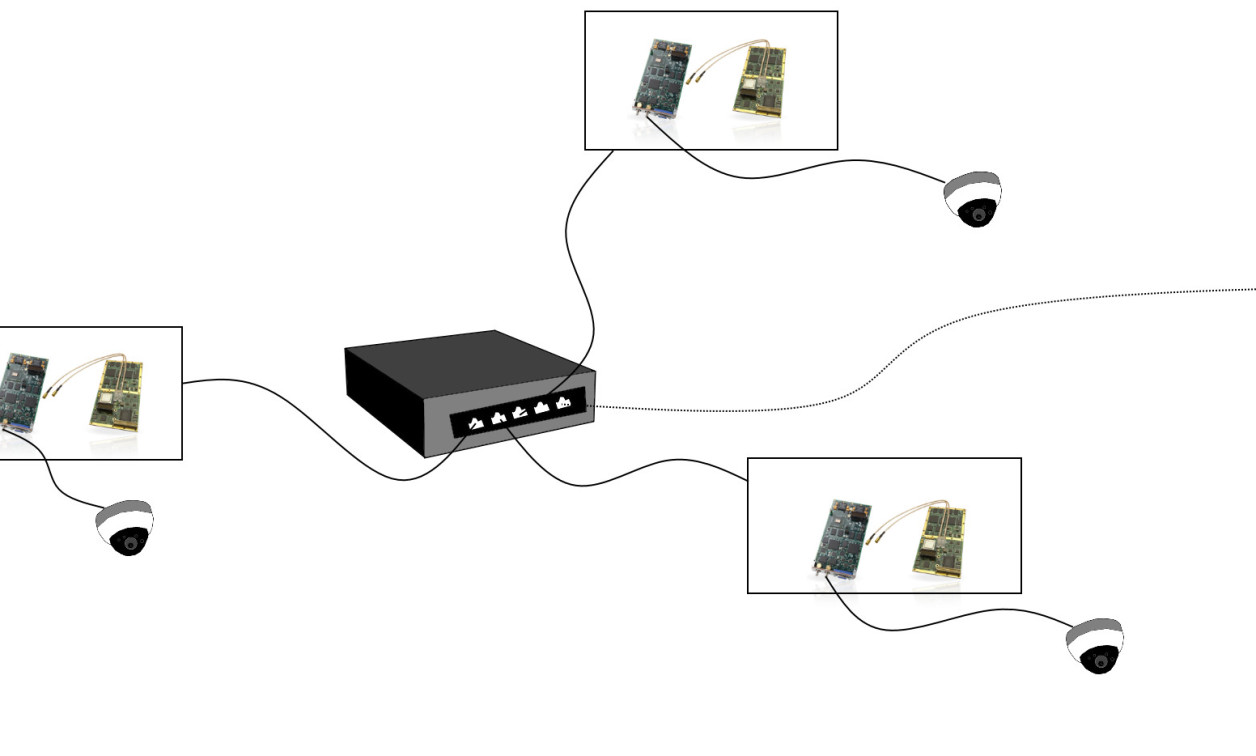

An example environment for this would be a naval vessel outfitted with six SDI cameras in various locations (Figure 1). Each of these cameras has an embedded VME single-board computer (SBC) close by running an SDI encoder card like the Tech Source VC100x or VC102x. This card takes in the SDI video and encodes it to H.264 before passing it off to the host PC. This host PC can then show the stream locally and forward the stream to all other interested parties.

Added value

Once the video is compressed and sent out over Ethernet, the viewing parties must capture the Ethernet packets and decode the video before displaying it. Further benefits follow if the selected radar suite itself can handle the decoding. Applications such as Cambridge Pixel’s SPx software already support decoding and displaying these H.264 feeds; furthermore, they can directly integrate and correlate the video feed with the radar data and enable the user to set up the H.264 video stream parameters on the Tech Source Condor VC100x or VC102x.

For example, when a target of interest is identified in the radar view, the software can control the nearest camera via a Pelco-D or similar interface to show the target on video (slew to cue). The position of the camera is then controlled by the radar’s observation of the target. This allows the user to instantly analyze what has been detected on radar and determine whether it is truly a target of interest or simply noise. Going further, the user may then want to process the camera video to track the target from the real-time video signal (video tracking). Optimum detection and assessment arise from integrating radar, which provides long-range, 360-degree coverage, with cameras that support visual assessment and identification.
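The slew-to-cue idea can be sketched in Python as three steps: convert the radar plot to ship-frame coordinates, pick the closest camera, and issue a pan command toward the target. This is a simplified illustration, not how any particular radar suite implements it. The camera positions, the convention that bearings run clockwise from the bow, and the assumption that the camera's current pan angle is known are all hypothetical. The frame layout follows the basic seven-byte Pelco-D format (sync byte, address, two command bytes, pan and tilt speed, modulo-256 checksum).

```python
import math

PAN_RIGHT = 0x02  # Pelco-D command 2 bit for pan right
PAN_LEFT = 0x04   # Pelco-D command 2 bit for pan left


def target_xy(range_m, bearing_deg):
    """Radar polar plot to ship-frame x (starboard) and y (forward)."""
    b = math.radians(bearing_deg)
    return range_m * math.sin(b), range_m * math.cos(b)


def nearest_camera(cameras, target):
    """cameras maps a name to its (x, y) mount position in metres."""
    return min(cameras, key=lambda name: math.dist(cameras[name], target))


def pan_bearing_deg(camera_xy, target):
    """Bearing from the camera to the target, clockwise from the bow."""
    dx = target[0] - camera_xy[0]
    dy = target[1] - camera_xy[1]
    return math.degrees(math.atan2(dx, dy)) % 360.0


def pelco_d_frame(address, command2, pan_speed=0, tilt_speed=0):
    """Build a 7-byte Pelco-D frame with command 1 left at zero."""
    body = [address & 0xFF, 0x00, command2 & 0xFF,
            pan_speed & 0xFF, tilt_speed & 0xFF]
    checksum = sum(body) % 256  # sum of bytes 2 to 6, modulo 256
    return bytes([0xFF] + body + [checksum])


def pan_command(address, current_deg, wanted_deg, speed=0x20):
    """Pan in whichever direction reaches the wanted bearing soonest."""
    error = (wanted_deg - current_deg + 180.0) % 360.0 - 180.0
    direction = PAN_RIGHT if error >= 0 else PAN_LEFT
    return pelco_d_frame(address, direction, pan_speed=speed)
```

In use, a plot at 100 m on bearing 090 would be converted with `target_xy(100, 90)`, matched to a camera with `nearest_camera()`, and the resulting `pan_command()` bytes written to that camera's serial port until the pan angle matches the computed bearing.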

Furthermore, applications can typically add other beneficial video features, such as overlays and target/motion tracking, by using a dedicated GPU to analyze the video feed. A dedicated GPU not only safeguards the performance of the radar applications, but can also speed up decoding of the H.264 feed to further reduce its latency. Some systems have on-board graphics available, but only a dedicated GPU can ensure strong performance. With the data size now much more manageable, the video and/or radar display can also be recorded to a local storage device for future viewing and analysis.

Integration is worth it

Radar will always be a necessary part of the naval surveillance environment, but video can be a vital supplement to further reduce potential false positives in target acquisition. Video can be difficult to implement, but compressing and distributing it over an existing Ethernet network saves unnecessary cabling and allows the video to be accessed at any location. All this data working together provides the user with true situational awareness.

Christopher Fadeley is a Software Engineer and Project Manager at Tech Source. He holds a B. Eng in Computer Engineering: Digital Arts & Sciences from the University of Florida. Fadeley specializes in military embedded systems software and video encoding/streaming solutions.

Tech Source

chrisf@techsource.com

Figure 1: Streaming compressed video in a naval-ship environment can be accomplished by posting six SDI cameras in various locations, each with an embedded VME SBC running an SDI encoder card. The card accepts the SDI video, encodes it to H.264, then passes it off to the PC host. The host PC can both show the stream locally and forward it to other interested parties.